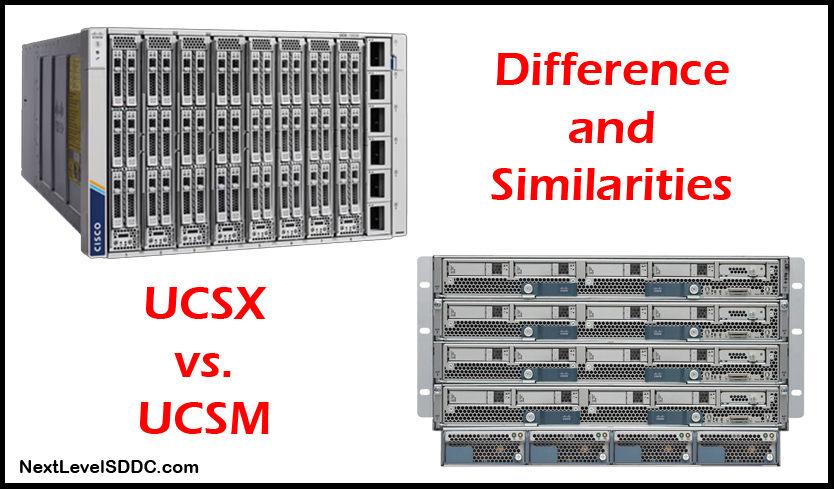

The new UCSX chassis follows the same design philosophy as its predecessor, combining computing, networking, storage access, and virtualization into a single cohesive platform, with some minor tweaks to support newer technologies.

Some of the main differences include:

- UCSX is configured and managed with Intersight, while the prior UCS design used UCS Manager

- Intersight is a SaaS offering from Cisco that can also be deployed on-premises if desired

- UCS Manager was the original management software for UCS and was offered on-premises only

- Three profile types are available: Domain, Chassis, and Server profiles

- UCSX houses the blades vertically, while the previous UCS chassis housed them horizontally (both fit 8 blades)

- UCSX has no midplane, so the blades connect directly to the IFMs

- Intersight is entirely policy-based: every setting is a policy. This helps keep large environments standardized, but it can get confusing if you have many different use cases, clusters, etc.

- Intersight also lets you configure servers/nodes ahead of time. Admins can create the required server profiles in advance, and the nodes configure themselves once they are registered in the chassis

- What happens if Intersight or the Internet is down? How can you still access your environment?

- Luckily, the Fabric Interconnects can be accessed on the local network, so in the worst case you can still reach the server KVMs even if Intersight (SaaS or on-premises) is not accessible

- UCSX requires 7RU of rack space, while the previous UCS chassis requires only 6RU

- UCSX requires 6x 2800W PSUs, while the previous UCS chassis requires only 4x 2500W PSUs

- In UCSX, the IO Modules (IOMs) are replaced by IFMs (Intelligent Fabric Modules), which offer more bandwidth
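To make the policy-based model and the "configure ahead of time" workflow from the list above a bit more concrete, here is a toy Python model. It is not the Intersight API or SDK: the class names (`Policy`, `ServerProfile`, `Chassis`), the slot-based assignment, and the example settings are all hypothetical simplifications, assuming a profile is just a named bundle of reusable policies that gets applied when a node registers in a slot.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Policy:
    """A reusable, named setting (hypothetical stand-in for an Intersight policy)."""
    name: str
    settings: Dict[str, str]


@dataclass
class ServerProfile:
    """A profile holds no settings of its own, only references to policies."""
    name: str
    policies: List[Policy]

    def resolved_settings(self) -> Dict[str, str]:
        # Merge every referenced policy; later policies win on key conflicts.
        merged: Dict[str, str] = {}
        for policy in self.policies:
            merged.update(policy.settings)
        return merged


@dataclass
class Chassis:
    """Tracks profiles waiting for a node and the settings applied per slot."""
    pending: Dict[int, ServerProfile] = field(default_factory=dict)
    configured: Dict[int, Dict[str, str]] = field(default_factory=dict)

    def preassign(self, slot: int, profile: ServerProfile) -> None:
        # Pre-provisioning: the profile exists before any hardware is present.
        self.pending[slot] = profile

    def register_node(self, slot: int) -> Optional[Dict[str, str]]:
        # When a node registers in a slot that has a waiting profile, apply it.
        profile = self.pending.pop(slot, None)
        if profile is None:
            return None
        self.configured[slot] = profile.resolved_settings()
        return self.configured[slot]


if __name__ == "__main__":
    # Two profiles share the same policies, which is what keeps hosts standardized.
    bios = Policy("bios-virtualization", {"intel_vt": "enabled"})
    boot = Policy("boot-local-m2", {"boot_device": "m2"})
    host_a = ServerProfile("esxi-host-a", [bios, boot])
    host_b = ServerProfile("esxi-host-b", [bios, boot])

    chassis = Chassis()
    chassis.preassign(1, host_a)
    chassis.preassign(2, host_b)
    print(chassis.configured)        # {} (no nodes have registered yet)
    print(chassis.register_node(1))  # {'intel_vt': 'enabled', 'boot_device': 'm2'}
    print(chassis.register_node(3))  # None (no profile waiting for slot 3)
```

Because both profiles point at the same `Policy` objects, editing one policy changes what every profile referencing it resolves to. That is the upside (consistency) and the downside (a single policy touching many use cases) of a policy-based design in miniature.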

What about node differences? Should I get M6 or M7 nodes? It depends…

- For example, M7 nodes require DDR5 memory, which is still a bit pricey compared to DDR4. If your applications can truly take advantage of the extra memory speed, then by all means go for it. If they cannot, stick with M6, which supports DDR4 memory, and save a few bucks.

- M7 nodes also support 4th Gen Intel Xeon Scalable CPUs, while M6 nodes only support 3rd Gen Intel Xeon Scalable CPUs

- M7 nodes support 100/200GbE of throughput per node (VIC 15000 series cards), while B200 M6 blades support up to 40GbE each by default (VIC 1440). B200 M6 blades can reach higher throughput with other available VIC 1400 series cards.

- Do you have AI requirements? M7 nodes support GPUs, while B200 M6 blades do not

- M7 nodes support up to six drives, while B200 M6 blades support only two (the X210c M6 supports six as well)
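For a rough side-by-side view of the chassis and node figures quoted in this post, the small Python sketch below multiplies them out per chassis. The `ChassisSpec` class and its field names are hypothetical. The PSU total is a simple nameplate sum that ignores redundancy modes (such as N+1), and the bandwidth number is only the node-side upper bound with all 8 slots populated, assuming each node runs at the maximum per-node figure given above; the IFMs/IOMs and Fabric Interconnect uplinks can cap the real figure lower.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChassisSpec:
    name: str
    psu_count: int
    psu_watts: int
    node_slots: int
    max_gbe_per_node: int

    def psu_nameplate_watts(self) -> int:
        # Sum of PSU ratings; redundancy (e.g. N+1) lowers what is actually usable.
        return self.psu_count * self.psu_watts

    def node_side_gbps(self) -> int:
        # Upper bound from the node adapters only, with every slot populated.
        return self.node_slots * self.max_gbe_per_node


ucsx_m7 = ChassisSpec("UCSX + M7 nodes", 6, 2800, 8, 200)
ucs_b200_m6 = ChassisSpec("UCS + B200 M6 (VIC 1440)", 4, 2500, 8, 40)

for spec in (ucsx_m7, ucs_b200_m6):
    print(f"{spec.name}: {spec.psu_nameplate_watts()} W PSU nameplate, "
          f"{spec.node_side_gbps()} Gb/s node-side max")
```

With these inputs the script reports 16800 W and 1600 Gb/s for UCSX with M7 nodes versus 10000 W and 320 Gb/s for the previous chassis with B200 M6 blades, which is a quick way to see why the newer chassis needs the extra PSUs and the higher-bandwidth IFMs.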