What if I told you that you could use GPUs over the network, with dynamic memory allocation and bare-metal performance?

What if, on top of that, you could work around the current lead times on some of the higher-end NVIDIA cards (mainly the A100 and H100) while also saving on cost? Does the same performance at a lower cost and with shorter lead times sound interesting to you?

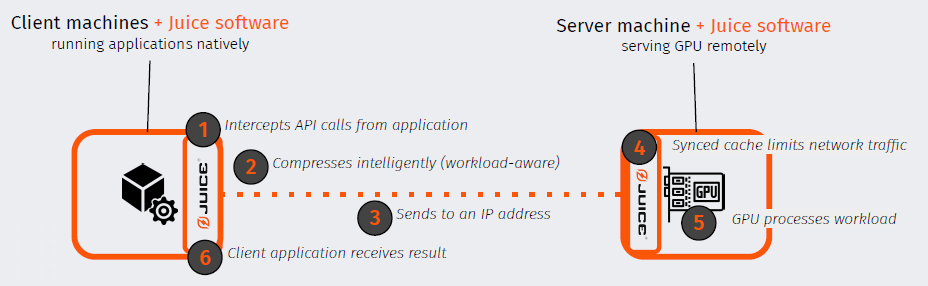

That is what Juice Labs has been working on for the past few years: virtualization for GPUs, much like what VMware vSphere did for servers around 15 years ago. You can use your GPUs at full capacity, not only on the machines where they are physically installed but wherever they are needed, while reducing spend and increasing flexibility.

- GPU-over-IP with Bare-Metal Performance (Client and server see the workload as local; no reconfiguration needed)

- Dynamic GPU Utilization (Share GPUs remotely among multiple applications and allocate resources dynamically)

- GPU Composability (Pool and scale GPUs independently of CPUs)
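To make "dynamic allocation" and "composability" a bit more concrete, here is a toy model of a GPU pool. In this model, clients running on CPU-only hosts lease memory from GPUs that live on other hosts, and several clients can share one GPU. This is a hypothetical sketch and not Juice Labs' API or scheduler. The class names, the best-fit placement policy, and the host and GPU names are all assumptions chosen for illustration. The sketch models only the bookkeeping, not the network transport that GPU-over-IP actually requires.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Gpu:
    """A physical GPU attached to some host in the pool (hypothetical model)."""
    gpu_id: str
    host: str
    total_gb: int
    leases: Dict[str, int] = field(default_factory=dict)  # client -> GB reserved

    @property
    def free_gb(self) -> int:
        return self.total_gb - sum(self.leases.values())


class GpuPool:
    """Toy scheduler: a client on any host leases GPU memory from any GPU host."""

    def __init__(self, gpus: List[Gpu]) -> None:
        self.gpus = {g.gpu_id: g for g in gpus}

    def allocate(self, client: str, mem_gb: int) -> Optional[str]:
        # Best fit (an assumed policy): choose the GPU with the least free memory
        # that still satisfies the request, keeping larger free blocks for big jobs.
        candidates = [g for g in self.gpus.values() if g.free_gb >= mem_gb]
        if not candidates:
            return None  # pool exhausted for this request size
        best = min(candidates, key=lambda g: g.free_gb)
        best.leases[client] = best.leases.get(client, 0) + mem_gb
        return best.gpu_id

    def release(self, client: str, gpu_id: str) -> None:
        # Return all memory this client holds on the given GPU to the pool.
        self.gpus[gpu_id].leases.pop(client, None)


if __name__ == "__main__":
    # Hypothetical hosts: GPUs sit in "gpu-rack-*", workloads run elsewhere.
    pool = GpuPool([
        Gpu("a100-0", host="gpu-rack-1", total_gb=80),
        Gpu("a100-1", host="gpu-rack-2", total_gb=40),
    ])
    print(pool.allocate("cpu-node-7/train", 30))  # a100-1
    print(pool.allocate("cpu-node-9/infer", 10))  # a100-1 (now shared by two clients)
    print(pool.allocate("edge-3/infer", 50))      # a100-0
```

Because the clients are identified only by name and never tied to the host that owns the GPU, compute and GPU capacity scale independently in this model, which is the idea behind "composability."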

Several configurations are possible with this technology:

- Intra-Datacenter Connect

  - Freely move GPUs between server instances within a datacenter, over the network.

- Inter-Region Connect

  - Attach GPUs to server instances across regions.

- Inter-Cloud, Inter-Region Connect

  - Attach GPUs to server instances from other clouds (public and private) and from other regions.

- Hybrid Cloud Connect

  - Easily flex capacity between cloud and on-prem compute, and get more GPU capacity when and where you need it.

- Edge Node Connect

  - Bring GPUs to your edge on demand, and flex up to more powerful GPUs as needed.

What are your thoughts? Is this cool tech? Do you have an immediate use case for it? Would you like to know more?

Official Juice Labs website here