Are you a VMware shop currently using Pure Storage? In case you missed Pure's best practices guide, I wanted to give you a simple way to apply its recommended multipathing setting to an entire ESXi cluster at once (no reboot, zero downtime required).

First of all, what am I talking about here? VMware ESXi uses round robin (VMW_PSP_RR) for its storage path selection, and by default it switches to the next path only after 1,000 I/O operations have completed on the current one. That works, but Pure's recommendation is to lower this value to 1 so that ESXi alternates paths after every single I/O.
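To make the difference concrete, here is a simplified model of how an I/O limit drives path switching. It is only a sketch and not VMware's actual implementation: the function name rr_sequence and the path labels are hypothetical, and it ignores details such as ALUA path states. It prints the path that would serve each I/O in turn.

```shell
# Hypothetical helper (simplified model, not VMware's code): stay on a path
# for <iops_limit> I/Os, then move to the next path, wrapping around.
# Usage: rr_sequence <iops_limit> <total_ios> <path>...
rr_sequence() {
  limit=$1
  total=$2
  shift 2
  npaths=$#
  io=0
  while [ "$io" -lt "$total" ]; do
    # 0-based index of the path that serves this I/O
    idx=$(( (io / limit) % npaths ))
    # Positional parameters are 1-based, so look up ${idx+1}
    eval "path=\${$((idx + 1))}"
    printf '%s\n' "$path"
    io=$((io + 1))
  done
}
```

For example, `rr_sequence 1 4 vmhba1 vmhba2` prints vmhba1, vmhba2, vmhba1, vmhba2 (one per line), while `rr_sequence 1000 4 vmhba1 vmhba2` sends all four I/Os to vmhba1.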

Performance: You probably won't notice a difference unless you were already maxing out one of the paths. If you were, after the change you may be able to push up to twice as much bandwidth as before (with two paths), since I/O now alternates constantly between both (or more) paths!

Failover Time: If a hardware failure occurs on one of the ports (HBA, twinax cable, etc.), the path is removed from the rotation immediately instead of after the 10 seconds it would otherwise take.

Storage Controller Balance: This setting also helps you leverage both controllers on the storage side and keep the load balanced between them. Whenever the array's OS is upgraded, a controller is unavailable for several minutes while it reboots. For this process to be seamless, failover needs to happen as quickly as possible so that data requests don't time out.

Are you convinced yet that reducing your round robin IOPS limit is the way to go?

Awesome! On to the good part, then: how do I change this setting?

If you want to apply the setting one host at a time, you can:

- Enable SSH on the host

- Establish an SSH session with the host

- Log in as root

- Run the following command on that host:

```
esxcli storage nmp satp rule add -s "VMW_SATP_ALUA" -V "PURE" -M "FlashArray" -P "VMW_PSP_RR" -O "iops=1" -e "FlashArray SATP Rule"
```

Or you can apply the rule to the entire cluster (or all of vCenter, too!):

- Run the following script from any machine that has PowerShell and PowerCLI installed and network access to vCenter:

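```powershell
# Prompt for vCenter credentials and connect
$login = Get-Credential
Connect-VIServer -Server vcenter.domain.local -Credential $login

# Every ESXi host in the target cluster
# (to target every host in vCenter instead, use: $hosts = Get-VMHost)
$hosts = Get-Cluster "vCenter-ClusterName" | Get-VMHost

foreach ($esx in $hosts)
{
    # esxcli V2 interface for this host
    $esxcli = Get-EsxCli -VMHost $esx -V2

    # Build the arguments for "esxcli storage nmp satp rule add"
    $PureNMP = $esxcli.storage.nmp.satp.rule.add.CreateArgs()
    $PureNMP.description = "Pure Storage Flash Array SATP Rule"
    $PureNMP.model = "FlashArray"
    $PureNMP.vendor = "PURE"
    $PureNMP.satp = "VMW_SATP_ALUA"
    $PureNMP.psp = "VMW_PSP_RR"
    $PureNMP.pspoption = "iops=1"

    # Add the rule: FlashArray devices claimed from now on use round robin with iops=1
    $esxcli.storage.nmp.satp.rule.add.Invoke($PureNMP)
}
```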
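Whichever route you take, you may want to confirm on a given host that the rule is actually in place. The sketch below assumes you are in an SSH session on the host and pipe in the output of esxcli storage nmp satp rule list -s VMW_SATP_ALUA. The function name has_pure_rr_rule is hypothetical, and the matching assumes the Vendor and Model columns appear next to each other in that output (as they do when the Device column is empty).

```shell
# Hypothetical helper: reads `esxcli storage nmp satp rule list` output on stdin
# and exits 0 if it contains a PURE/FlashArray rule using VMW_PSP_RR with iops=1.
# Assumption: Vendor and Model are adjacent columns (Device column left empty).
has_pure_rr_rule() {
  grep -E 'PURE[[:space:]]+FlashArray' \
    | grep 'VMW_PSP_RR' \
    | grep -Eq 'iops=1([^0-9]|$)'   # iops=1 exactly, not iops=10 or iops=1000
}

# Example on an ESXi host:
#   esxcli storage nmp satp rule list -s VMW_SATP_ALUA | has_pure_rr_rule \
#     && echo "Pure SATP rule present" || echo "Pure SATP rule missing"
```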

- Create new datastores on the Pure FlashArray; the rule will be applied to them automatically.

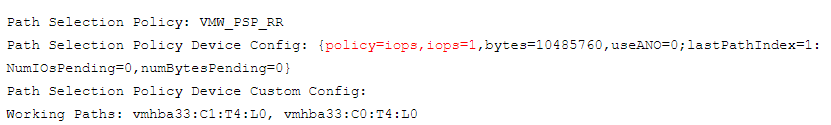

- Validate that the new settings took effect with the following command:

```
esxcli storage nmp device list
```

- In the output, find the new datastore's FlashArray device and confirm that it shows "Path Selection Policy: VMW_PSP_RR" and "iops=1" in its "Path Selection Policy Device Config" line.
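With many datastores, scanning that output by eye gets tedious. Here is a minimal sketch that does the check for you, assuming the usual layout of esxcli storage nmp device list output (each device ID at the start of a line, its attributes indented beneath it). The function name check_rr_iops is hypothetical. It prints every device whose ID starts with the given prefix but does not report policy=rr with iops=1, so no output means every matching device is set correctly.

```shell
# Hypothetical helper: reads `esxcli storage nmp device list` output on stdin and
# prints each device whose ID starts with the prefix in $1 but whose
# "Path Selection Policy Device Config" line lacks policy=rr or iops=1.
check_rr_iops() {
  awk -v prefix="$1" '
    # A device ID starts at column 1; its attributes are indented beneath it.
    /^[^ \t]/ { dev = $1; next }
    # Inspect the PSP config line of each device that matches the prefix.
    /Path Selection Policy Device Config:/ {
      if (index(dev, prefix) == 1 && ($0 !~ /policy=rr/ || $0 !~ /iops=1([^0-9]|$)/))
        print dev
    }
  '
}

# Example on an ESXi host (naa.xxxx = the start of your FlashArray device IDs):
#   esxcli storage nmp device list | check_rr_iops naa.xxxx
```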

What if you created the datastores before the SATP rule? No problem!

- Run the following command in the same SSH session to update the existing datastores:

```
for i in $(esxcfg-scsidevs -c | awk '{print $1}' | grep naa.xxxx); do esxcli storage nmp psp roundrobin deviceconfig set --type=iops --iops=1 --device=$i; done
```

- Here, naa.xxxx should match the first few characters of your datastores' NAA device IDs.

- Validate that the settings took effect with the same command we used before:

```
esxcli storage nmp device list
```

- There is no need to restart the host for the changes to take effect.
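If you would rather see which devices the bulk-update loop will touch before changing anything, the hypothetical preview_rr_iops function below is a dry-run variant of it. It reads esxcfg-scsidevs -c output, keeps the device IDs that begin with your prefix (matching at the start of the ID, whereas the loop's grep matches anywhere in it), and prints the esxcli command it would run for each device without executing anything.

```shell
# Hypothetical dry-run helper: reads `esxcfg-scsidevs -c` output on stdin and
# prints, without running it, the esxcli command for each device whose ID
# (first column) starts with the prefix in $1. The header row never matches.
preview_rr_iops() {
  awk -v prefix="$1" 'index($1, prefix) == 1 { print $1 }' |
    while read -r dev; do
      printf 'esxcli storage nmp psp roundrobin deviceconfig set --type=iops --iops=1 --device=%s\n' "$dev"
    done
}

# Example on an ESXi host:
#   esxcfg-scsidevs -c | preview_rr_iops naa.xxxx
```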

That is it. Hopefully you found this information useful and can now squeeze every last bit of performance out of your array! I will soon write up instructions explaining how to confirm that all your hosts have a balanced connection to your FlashArray controllers (roughly the same number of IOPS per path).

More information on creating/deleting a Pure SATP rule here

More information on modifying datastore settings here
