What started as a Linux VM with a disk partition out of space quickly escalated to the application going down. "What did you operations guys do?!" No worries, let me roll up my sleeves and dig a bit deeper so we can understand how expanding the disk and then rebooting the server broke your application. I was confident the disk expansion was not the root cause, but instead of deflecting the issue I took it as a learning opportunity. Even though I am mostly engaged in pre-sales conversations, I hope I never become that guy who does not want to help simply because it is not my job… Oddly enough, I still enjoy operations work from time to time.

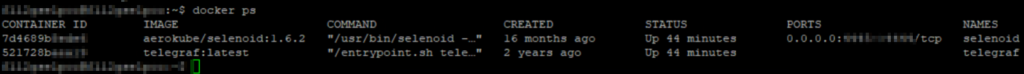

The first thing I did was lean on Google for some basic Docker commands, and the first one I found was docker ps, which lists the running containers.

cli > docker ps

I could see 2 containers that had been running for 44 minutes, which matched the last time this VM was rebooted to complete the disk expansion. Oddly enough, the grid was still offline when I tried to access it through a browser. No big deal, you know what? Let me SSH into a second instance and see if there are any differences. Nope: other than a longer uptime, the same 2 containers were running on that instance as well.

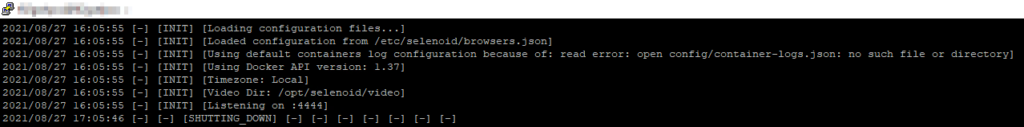

Ok, time to review the logs. Let’s see which command can help with that.

cli > docker logs applicationname

I could clearly see an error during the initialization phase, so I thought I was onto something. I started by looking up the file it was trying to open (container-logs.json), which did in fact not exist. Well, that could certainly explain why we are having issues, I thought!

Let me just copy the file from another instance and that should fix the issue. Wrong! The file did not exist on the other instances either, and the same error was in their logs. It could not be the root cause, since the error was already there the last time the grid was working as expected.
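In hindsight, timestamps are what separate old noise from a new failure, because an error that was already being logged before the outage is unlikely to be the cause. Here is a minimal sketch of how I would filter the logs next time. The helper name `recent_errors` and the default of 200 lines are my own assumptions; `--timestamps` and `--tail` are standard `docker logs` options.

```shell
# Hypothetical helper: show recent log lines that mention "error", with timestamps.
# docker logs replays both the container's stdout and stderr, so merge the two
# streams before filtering.
recent_errors() {
    container="$1"
    lines="${2:-200}"   # how many trailing log lines to scan (assumed default)
    docker logs --timestamps --tail "$lines" "$container" 2>&1 | grep -i "error"
}

# Usage: recent_errors applicationname 500
```

If the timestamps on the matching lines are older than the reboot, the error was already there and is probably not what broke things.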

So what now? Logs are not providing any other clues and everything looks fine from a high level, so what else could be causing this issue? We have to be missing something on this one instance.

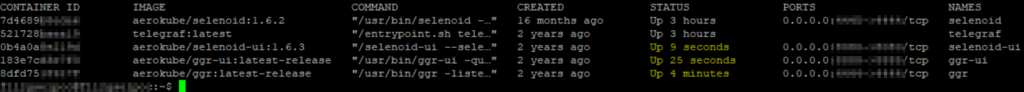

After reading some more about Docker and how to troubleshoot it, I ran into a different command that lists all containers on the instance, including the stopped ones, so I gave it a try.

cli > docker container ls -a

Guess what? I could see 3 other containers that were stopped on the master instance. That made sense, since these UI containers were only configured on the master node and not on the others (I did not know that, though!).
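To jump straight to the stopped containers instead of scanning the full list, the same listing can be narrowed with a filter. This is a sketch, not what I ran that day. The function name `list_stopped_containers` is made up for illustration; `--filter "status=exited"` and the `--format` template fields `.Names` and `.Status` are regular `docker ps` options.

```shell
# Hypothetical helper: list only containers that have exited,
# printing the container name and its status (e.g. "Exited (0) 44 minutes ago").
list_stopped_containers() {
    docker ps -a \
        --filter "status=exited" \
        --format '{{.Names}}\t{{.Status}}'
}

# Usage: list_stopped_containers
```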

Let’s start them manually and test again…

Voila! Console was now accessible via browser and the entire grid was back online.

Command to start them:

cli > docker start applicationname
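With several stopped containers, starting them one by one gets tedious. A rough sketch of a loop that starts every exited container follows. The name `start_stopped_containers` is hypothetical, and it assumes that every exited container on the host is one you actually want running, which may not be true everywhere.

```shell
# Hypothetical helper: start every container currently in the "exited" state.
# Assumption: nothing on this host was stopped on purpose.
start_stopped_containers() {
    # Container names cannot contain whitespace, so word splitting is safe here.
    for name in $(docker ps -a --filter "status=exited" --format '{{.Names}}'); do
        echo "starting $name"
        docker start "$name"
    done
}

# Usage: start_stopped_containers
```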

Now that everything was back up, I also wanted to prevent this from ever happening again, especially after something as simple as a reboot, so I researched how to update the container startup settings and applied the changes shortly after.

Command to automatically start/restart the container unless manually stopped:

cli > docker update --restart unless-stopped applicationname
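To confirm the change took effect, the restart policy can be read back with `docker inspect`. This is a small sketch. The function name `show_restart_policy` is my own; the template fields `.Name` and `.HostConfig.RestartPolicy.Name` come from the standard inspect output.

```shell
# Hypothetical helper: print each container's name and its restart policy.
# Note that docker inspect reports the name with a leading slash.
show_restart_policy() {
    docker inspect --format '{{.Name}} {{.HostConfig.RestartPolicy.Name}}' "$@"
}

# Usage: show_restart_policy applicationname
```

The policy only helps if the Docker daemon itself comes up at boot. On a systemd-based host, which is an assumption on my part, `systemctl is-enabled docker` would tell you that.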

What a rollercoaster this issue was… on a Friday afternoon, right before the weekend, with tons of other stuff to get completed as well. Still, I am so glad I took ownership of it, learned something new, and was able not only to fix it but also to apply a change that prevents the same issue in the future.

After that was all done, I was able to get my weekend started feeling like a boss!